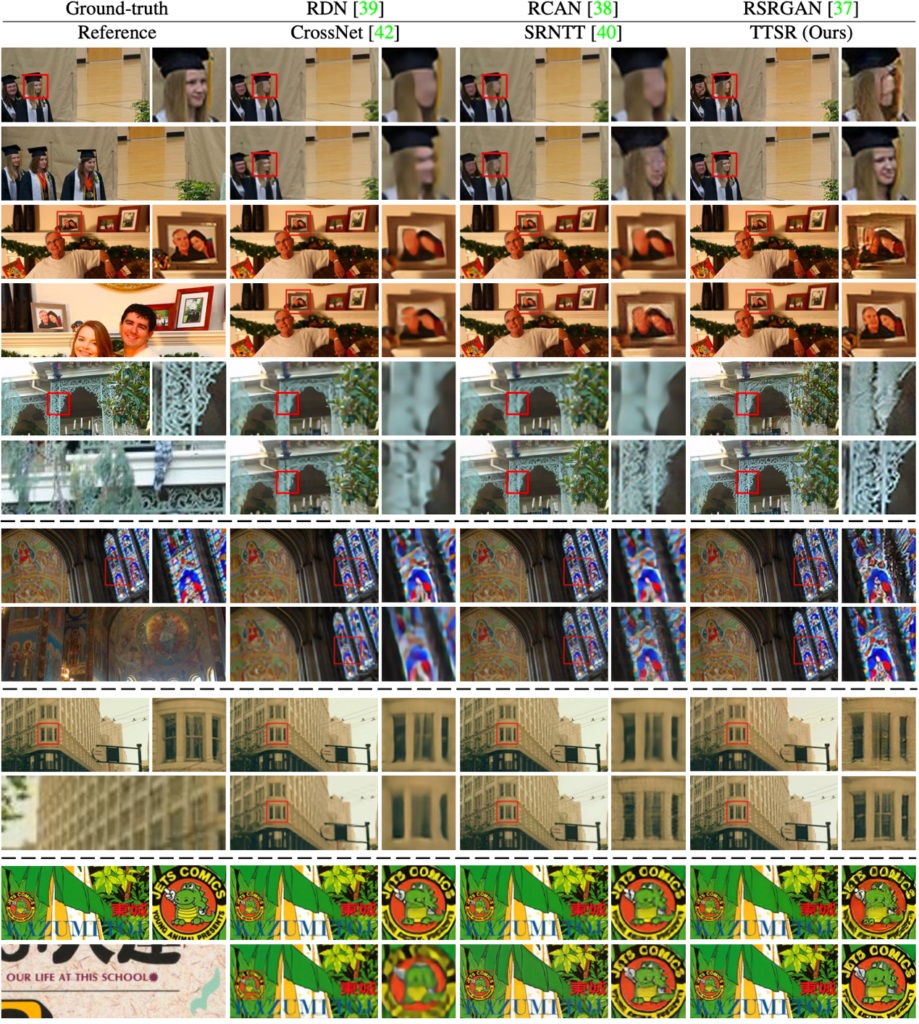

Using deep learning tools, a Microsoft Research team including Bo Zhang and Ziyu Wan proposes a triplet domain translation network for restoring old photos. The approach trains on real photos together with a large number of synthetic image pairs. Two variational autoencoders (VAEs) map old photos and clean photos into two separate latent spaces, and the network then learns the translation between these spaces from synthetic paired data. However, the team says that handling the degradation in old photos requires a complex system, starting with learning a texture transformer network for image super-resolution. Image super-resolution (SR) aims to recover natural textures when reconstructing a high-resolution counterpart of a degraded, low-resolution image. According to Microsoft, previous SR models often produced blurry results and hallucinated details.
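The latent-translation idea can be illustrated with a deliberately tiny toy. The sketch below is not Microsoft's model, and every name in it is hypothetical: the two VAEs are replaced by a fixed linear encoder and decoder (block averaging and repetition), the "old photo" damage is an invented fade-and-brighten rule, and the latent mapping is a per-dimension least-squares fit. It only shows the data flow: encode the old photo, translate its latent code with a mapping learned from synthetic pairs, and decode with the clean-photo decoder.

```python
"""Toy sketch of latent-space translation for old-photo restoration.

Assumptions (hypothetical, for illustration only): images are flat lists of
floats, the two VAEs are replaced by a fixed linear encoder/decoder, and the
latent mapping is a per-dimension affine least-squares fit.
"""
from typing import List, Tuple

Image = List[float]
Latent = List[float]
BLOCK = 4  # hypothetical: each latent dimension summarizes 4 pixels


def encode(img: Image) -> Latent:
    """Linear stand-in for a VAE encoder: average each block of pixels."""
    return [sum(img[i:i + BLOCK]) / BLOCK for i in range(0, len(img), BLOCK)]


def decode(z: Latent) -> Image:
    """Linear stand-in for the clean-photo decoder: repeat each latent value."""
    return [v for v in z for _ in range(BLOCK)]


def degrade(img: Image) -> Image:
    """Hypothetical synthetic degradation: reduce contrast and brighten."""
    return [0.6 * p + 0.2 for p in img]


def fit_mapping(pairs: List[Tuple[Latent, Latent]]) -> List[Tuple[float, float]]:
    """Least-squares fit of z_clean[d] ~ a * z_old[d] + b for each latent dimension d."""
    params = []
    for d in range(len(pairs[0][0])):
        xs = [old[d] for old, _ in pairs]
        ys = [clean[d] for _, clean in pairs]
        mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
        var = sum((x - mx) ** 2 for x in xs)
        cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
        a = cov / var
        params.append((a, my - a * mx))
    return params


def restore(old: Image, mapping: List[Tuple[float, float]]) -> Image:
    """Encode the old photo, translate its latent code, decode it as a clean photo."""
    z_old = encode(old)
    z_clean = [a * v + b for v, (a, b) in zip(z_old, mapping)]
    return decode(z_clean)


# Synthetic paired data: clean images and their degraded versions, in latent space.
clean_images = [[0.1 * k] * BLOCK + [1.0 - 0.1 * k] * BLOCK for k in range(5)]
pairs = [(encode(degrade(c)), encode(c)) for c in clean_images]
mapping = fit_mapping(pairs)

# Restore a degraded image that was not among the training pairs.
target = [0.25] * BLOCK + [0.8] * BLOCK
restored = restore(degrade(target), mapping)
print([round(p, 3) for p in restored])  # prints [0.25, 0.25, 0.25, 0.25, 0.8, 0.8, 0.8, 0.8]
```

Because the toy degradation is affine, a linear latent mapping recovers the clean image exactly. Real photo damage (scratches, noise, blur, fading) is far more complex, which is why the actual system relies on learned VAEs and a deep mapping network.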

New Texture Transformer

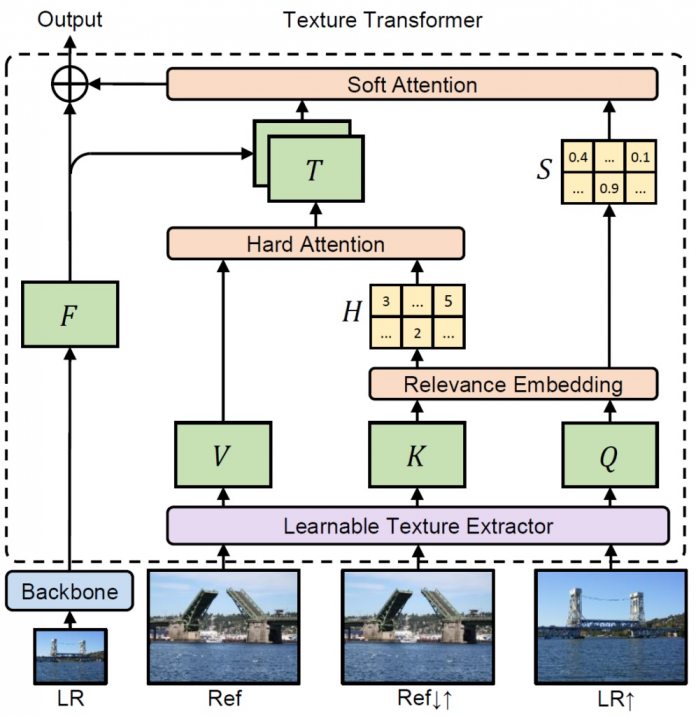

To address this, Microsoft Research turns to reference-based image super-resolution (RefSR), a branch of the image SR field: “RefSR approaches utilize information from a high-resolution image, which is similar to the input image, to assist in the recovery process. The introduction of a high-resolution reference image transforms the difficult texture generation process to a simple texture search and transfer, which achieves significant improvement in visual quality.”

Microsoft's approach is a new Texture Transformer Network for Image Super-Resolution (TTSR), which searches a high-resolution reference image for relevant textures and transfers them to the low-resolution input. The novel texture transformer detailed by Microsoft Research Asia consists of four parts: the learnable texture extractor (LTE), the relevance embedding module (RE), the hard-attention module for feature transfer (HA), and the soft-attention module for feature synthesis (SA).

Microsoft also notes: “With the emergence of deep learning, one can address a variety of low-level image restoration problems by exploiting the powerful representation capability of convolutional neural networks, that is, learning the mapping for a specific task from a large amount of synthetic images.” Microsoft's full research blog, which details how LTE, RE, HA, and SA function within the new texture transformer, is worth reading.
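The search-and-transfer step can be sketched in a few lines of Python. This is a simplified sketch of the general TTSR scheme, not Microsoft's implementation: relevance is computed as cosine similarity between query patches (from the upsampled low-res input) and key patches (from the down-then-up-sampled reference), hard attention copies the most relevant reference texture from the values (the original reference), and soft attention uses the highest relevance score as a confidence weight. The feature vectors are hand-written stand-ins for LTE outputs, and the learned convolution that fuses the input features F with the transferred textures T is replaced by a hypothetical linear blend.

```python
"""Simplified sketch of TTSR-style texture search and transfer.

Assumptions: each patch is a short list of floats standing in for LTE
features; the learned fusion convolution is replaced by a linear blend.
"""
import math
from typing import List, Tuple

Vec = List[float]


def normalize(v: Vec) -> Vec:
    """Scale a feature vector to unit L2 norm (zero vectors are left as-is)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > 0 else list(v)


def relevance(q: List[Vec], k: List[Vec]) -> List[List[float]]:
    """Relevance embedding: cosine similarity r[i][j] of query patch i and key patch j."""
    qn = [normalize(v) for v in q]
    kn = [normalize(v) for v in k]
    return [[sum(a * b for a, b in zip(qi, kj)) for kj in kn] for qi in qn]


def hard_attention(r: List[List[float]], v: List[Vec]) -> Tuple[List[int], List[Vec]]:
    """For each query, take the index of the most relevant key and copy that value patch."""
    h = [max(range(len(row)), key=row.__getitem__) for row in r]
    t = [list(v[j]) for j in h]
    return h, t


def soft_attention(r: List[List[float]]) -> List[float]:
    """Confidence of each transfer: the highest relevance score for that query."""
    return [max(row) for row in r]


def synthesize(f: List[Vec], t: List[Vec], s: List[float]) -> List[Vec]:
    """Hypothetical fusion: move F toward T, weighted by confidence S.
    TTSR applies a learned convolution over concat(F, T) here instead."""
    return [[fi + si * (ti - fi) for fi, ti in zip(fv, tv)]
            for fv, tv, si in zip(f, t, s)]


Q = [[1.0, 0.0], [0.6, 0.8]]              # queries: upsampled low-res patches
K = [[0.0, 2.0], [3.0, 0.0], [1.0, 1.0]]  # keys: blurred reference patches
V = [[0.0, 9.0], [9.0, 0.0], [5.0, 5.0]]  # values: sharp reference textures
F = [[1.0, 0.0], [0.6, 0.8]]              # hypothetical low-res backbone features

r = relevance(Q, K)
h, T = hard_attention(r, V)
s = soft_attention(r)
out = synthesize(F, T, s)
print(h)  # prints [1, 2]
```

Here the second input patch is matched to the third reference patch, and its confidence of about 0.99 lets most of that texture through; a poorly matched patch would receive a lower weight and keep more of its original features.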